In this post, I’m writing a follow-up to an article I wrote 6 years ago. In the first part, I used some basic machine learning algorithms to determine the similarity between products with different attributes. I used Hamming Distance for product features, Euclidean Distance for product price and the Jaccard Similarity Coefficient for product categories. If you haven’t read it yet, check it out [here].

For this article, we’ll continue on the same track of building a product recommender system. However, this time we won’t use any of the product attributes or features that we used in the first part. Instead, we’ll use only the product description text and see how much information we can extract from text alone, using Natural Language Processing (NLP).

When I wrote the first part in March of 2019, GPT and BERT were still babies less than a year old. In recent years, there have been vast improvements and huge accomplishments in the AI field. It’s hard to find anything that’s not AI-driven or AI-enhanced these days. AI can be very advanced and complex, and for many or most use cases it makes sense to use external services instead of implementing things on your own.

The example of NLP usage we’re covering in this blog involves comparing and calculating similarity scores between text documents. We’ll keep it simple and won’t go into depth too far, so it’ll be quite straightforward to implement and set up on your own. Let’s get started by going through what we’re looking into in more detail.

Methodology

In part 1, we had product images and some product attributes, but no product descriptions. So, I started out by feeding the 10 product images from the first part through LibreChat to generate 10 text files with product descriptions, each containing 1000 words.

Now that we have generated product descriptions, we’ll use three different methods to compare these documents with each other to see which is the most accurate and efficient method.

1. Bag of Words (BoW)

With BoW, you start by taking all the unique words from all of the documents, creating a dictionary. Once you have the dictionary, you continue by counting the occurrences of all words in each document. This will create a sparse vector that can be used to compare similarities between documents.
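As a rough illustration of the two steps above, here is a minimal sketch of a BoW vectorizer. The function name bagOfWords and the sample tokens are made up for this example and are not taken from the repository; it also assumes the documents have already been split into words.

```php
<?php declare(strict_types=1);

/**
 * Hypothetical helper: builds Bag of Words count vectors.
 *
 * @param array<int, string[]> $documents one list of words per document
 * @return array{0: string[], 1: array<int, int[]>} the dictionary and one vector per document
 */
function bagOfWords(array $documents): array
{
    // Dictionary: every unique word across all documents, in a fixed order.
    $dictionary = array_values(array_unique(array_merge(...$documents)));

    $vectors = [];
    foreach ($documents as $words) {
        $counts = array_count_values($words);
        // One entry per dictionary word; 0 when the word is absent from this document.
        $vectors[] = array_map(fn (string $word): int => $counts[$word] ?? 0, $dictionary);
    }

    return [$dictionary, $vectors];
}

[$dictionary, $vectors] = bagOfWords([
    ['red', 'cotton', 'shirt', 'red'],
    ['blue', 'cotton', 'dress'],
]);

print_r($dictionary); // red, cotton, shirt, blue, dress
print_r($vectors);    // [2, 1, 1, 0, 0] and [0, 1, 0, 1, 1]
```

Most entries are 0 as soon as the dictionary grows, which is why the vector is called sparse.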

2. Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF combines two measures, Term Frequency and Inverse Document Frequency. Term Frequency (TF) counts how many times a word appears in a document, while Inverse Document Frequency (IDF) looks across all the documents in the corpus and weights each word by how rare it is. In contrast to BoW, words that appear in only a few documents get a higher weight than words that appear everywhere, so distinctive words contribute more to the similarity score, which should make it more accurate.
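To make the weighting concrete, here is a small sketch that uses the plain textbook variant, where TF is the word count divided by the document length and IDF is log(N / df). There are several TF-IDF variants (with smoothing, for instance), so the functions in the repository may well differ; the name tfIdf and the sample words are assumptions for this example.

```php
<?php declare(strict_types=1);

/**
 * Hypothetical helper: unsmoothed TF-IDF, tf * log(N / df).
 *
 * @param array<int, string[]> $documents one list of words per document
 * @return array<int, array<string, float>> one word => weight map per document
 */
function tfIdf(array $documents): array
{
    $documentCount = count($documents);

    // Document frequency: in how many documents does each word occur at least once?
    $documentFrequency = [];
    foreach ($documents as $words) {
        foreach (array_unique($words) as $word) {
            $documentFrequency[$word] = ($documentFrequency[$word] ?? 0) + 1;
        }
    }

    $vectors = [];
    foreach ($documents as $words) {
        $counts = array_count_values($words);
        $length = max(count($words), 1); // avoid division by zero for empty documents
        $vector = [];
        foreach ($documentFrequency as $word => $df) {
            $tf = ($counts[$word] ?? 0) / $length;
            $idf = log($documentCount / $df); // 0.0 when the word is in every document
            $vector[$word] = $tf * $idf;
        }
        $vectors[] = $vector;
    }

    return $vectors;
}

$vectors = tfIdf([
    ['red', 'cotton', 'shirt'],
    ['blue', 'cotton', 'dress'],
]);

// "cotton" occurs in both documents, so its weight drops to zero,
// while "red" only occurs in the first one and keeps a positive weight.
var_dump($vectors[0]['cotton'], $vectors[0]['red']);
```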

3. SBERT models

These are pretrained models that create text embeddings: dense vectors that capture the semantic meaning of sentences. In this example, we’ll use the “all-mpnet-base-v2” model, which creates a 768-dimensional vector, and the smaller “all-MiniLM-L6-v2” model, which creates a 384-dimensional vector and should be about 5 times faster, requiring less time and resources to generate vectors.

I’ve created a command that runs these three methods over all 10 product description texts and generates vectors for them, stored in an external JSON file. For BoW and TF-IDF, the logic is quite simple, so I wrote a few functions that create the vectors in PHP. For the SBERT models, you’d have to use Python, and since I haven’t got the highest proficiency in Python, I asked ChatGPT to create a Python script that does the same as the PHP logic and stores the generated vectors in external JSON files.

Preprocessing

Before generating vectors, we need to do some preprocessing of the product description texts. The techniques we’ll use are Normalization, Tokenization, Removing Stopwords and Stemming.

1. Normalization

When I normalized the documents, I removed everything that wasn’t a letter by using the regex /\P{L}/. \p{} and \P{} are regex Unicode properties: \p{L} matches any character in the given category (here L, letters), while \P{L} matches every character outside it. For UTF-8 text, the pattern also needs the u modifier in PHP. After normalizing the text with this regex, numbers, punctuation, any kind of whitespace or invisible separator, invisible control characters and unused code points are all removed.
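Here is a minimal sketch of what the normalization step could look like. Two things in it are my assumptions and not taken from the article’s code: each run of non-letters is replaced with a single space rather than removed outright, so that words stay separable for the tokenization step, and the text is lowercased so it can later be matched against a lowercase stopword list. The function name normalize is made up, and mb_strtolower requires the mbstring extension.

```php
<?php declare(strict_types=1);

// Hypothetical helper, not the function from the repository.
function normalize(string $text): string
{
    // \P{L}+ matches a run of characters that are NOT Unicode letters;
    // the "u" modifier makes PCRE treat the pattern and input as UTF-8.
    // Assumption: replace each run with one space instead of deleting it.
    $lettersOnly = preg_replace('/\P{L}+/u', ' ', $text) ?? '';

    // Assumption: lowercase so "Shirt" and "shirt" count as the same word.
    return mb_strtolower(trim($lettersOnly));
}

echo normalize("Women's T-shirt, 100% cotton!"); // women s t shirt cotton
```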

2. Tokenization

There are different ways to tokenize text, but essentially it means splitting the text up into a series of words. We’ll keep it basic and simply explode the text on every space: explode(' ', $document). This works well since we’ve already normalized the text in the previous step.

3. Removing Stopwords

Stopwords are common words that don’t contribute to the meaning of the text, like “the”, “a”, “is”, “and” etc. that are removed to get better results when comparing the texts. I found this text file with stopwords for the English language on GitHub that contains 1300 words. There are also stopwords available for other languages. If you’re interested, check them out [here].

Once we have the list of stopwords, simply run an array_filter over the words to remove them, like so: array_filter($words, fn ($word) => ! in_array($word, $stopwords, true))
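The tokenization and stopword steps can be combined into one small function, sketched below. It is a variation on the one-liner above, not the code from the repository: it splits on runs of whitespace with preg_split instead of explode, and it flips the stopword list into a lookup table so that each check is a hash lookup rather than a scan through the whole list. The function name and sample stopwords are made up for this example.

```php
<?php declare(strict_types=1);

/**
 * Hypothetical helper: split normalized text into words and drop stopwords.
 *
 * @param string[] $stopwords
 * @return string[]
 */
function tokenizeWithoutStopwords(string $normalizedText, array $stopwords): array
{
    // Split on runs of whitespace and skip empty strings.
    $words = preg_split('/\s+/u', $normalizedText, -1, PREG_SPLIT_NO_EMPTY) ?: [];

    // word => index map, so isset() can replace in_array().
    $stopwordLookup = array_flip($stopwords);

    // array_filter() preserves the original keys, so re-index afterwards.
    return array_values(array_filter(
        $words,
        fn (string $word): bool => !isset($stopwordLookup[$word])
    ));
}

print_r(tokenizeWithoutStopwords('the red shirt is a classic', ['the', 'is', 'a']));
// red, shirt, classic
```

With around 1300 stopwords and 10,000 words, the difference is small, but the lookup table keeps the cost from growing with the size of the stopword list.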

4. Stemming (Lemmatization)

Stemming means that you cut off the last part of a word so that words derived from the same base word collapse into a single entry. This reduces the size of the dictionary and also makes the similarity scores more accurate. So for example, “improve”, “improving”, “improvements” and “improved” would all be shortened to “improv”.

There’s another similar technique that’s more resource intensive but creates a more accurate root version of the word and that’s lemmatization. With lemmatization, the context of the text is also taken into consideration, so for example, with stemming, Caring would be Car, while with lemmatization, Caring would be shortened to Care. In the preprocessing examples we’re setting up in this blog, we’ll use the simpler version for creating root words, stemming.
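To show the idea of stemming in code, here is a deliberately naive suffix stripper. It is a toy and not the Porter algorithm or the stemmer used in the repository; a real project would more likely rely on an established Porter or Snowball implementation. The suffix list, the minimum stem length of 3 and the function name naiveStem are all assumptions chosen to reproduce the examples above. It needs PHP 8 for str_ends_with and assumes plain ASCII words.

```php
<?php declare(strict_types=1);

// Toy stemmer: strips the first matching suffix, longest suffixes first.
function naiveStem(string $word): string
{
    $suffixes = ['ements', 'ement', 'ing', 'ed', 'es', 'e', 's'];

    foreach ($suffixes as $suffix) {
        $stemLength = strlen($word) - strlen($suffix);

        // Keep at least 3 characters so short words like "red" stay intact.
        if ($stemLength >= 3 && str_ends_with($word, $suffix)) {
            return substr($word, 0, $stemLength);
        }
    }

    return $word;
}

print_r(array_map('naiveStem', ['improve', 'improving', 'improvements', 'improved']));
// improv, improv, improv, improv

echo naiveStem('caring'); // car
```

The last line shows the weakness mentioned above: without any knowledge of the language, “caring” ends up as “car”.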

Processing

Now that we have our vectors stored in our JSON files, we’ll loop through them and calculate the similarity using the formula for Cosine Similarity. For reference, this is roughly how long it took to generate the vectors and store them in external JSON files on my local machine.

    10 documents x 1000 words = 10,000 words
    ----------------------------------------
    all-mpnet-base-v2: 2776.87 ms
    all-MiniLM-L6-v2:   410.70 ms
    BoW:                 56.55 ms
    TF-IDF:               6.45 ms

Calculating cosine similarity for these few vectors isn’t a resource-intensive procedure, so we’ll simply calculate the score inside the controller before displaying the view. However, these similarity scores could of course also be stored in external files, a database or a cache system.

Here’s the class that I’m using to calculate the similarity score.

    <?php declare(strict_types=1);

    namespace App\Nlp;

    class Compare
    {
        public function cosineSimilarity(array $vectorA, array $vectorB): float
        {
            $dotProduct = 0.0;
            $magnitudeA = 0.0;
            $magnitudeB = 0.0;

            foreach ($vectorA as $key => $valueA) {
                // a key that is missing from $vectorB counts as 0
                $dotProduct += $valueA * ($vectorB[$key] ?? 0);
                $magnitudeA += $valueA ** 2;
            }

            // sum over all of $vectorB, so keys that only exist there still count
            foreach ($vectorB as $valueB) {
                $magnitudeB += $valueB ** 2;
            }

            // calculate magnitude (length) of the vectors
            $magnitudeA = sqrt($magnitudeA);
            $magnitudeB = sqrt($magnitudeB);
            $denominator = $magnitudeA * $magnitudeB;

            // guard against division by zero for empty or all-zero vectors
            return $denominator > 0.0 ? $dotProduct / $denominator : 0.0;
        }
    }

Results

Now that we’ve got all the similarity scores calculated from the generated vectors, let’s have a look at the result.

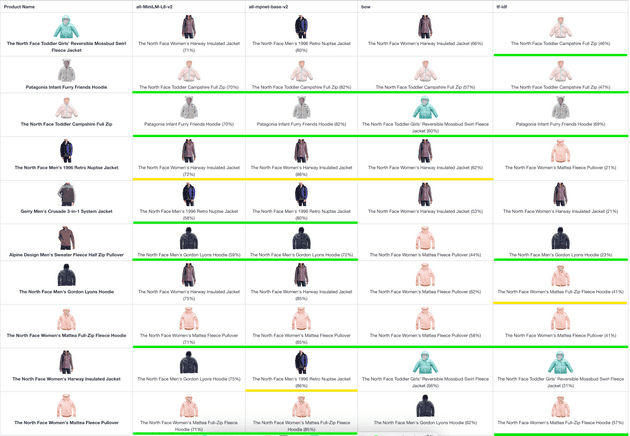

After displaying the results, I went through them and scored each recommendation based on how accurate I thought it was. The products underlined in green were, in my opinion, good recommendations and got 10 points each. The products underlined in yellow got either the target group or the product type right and were fair recommendations, so I gave them 5 points each.

After summing up all the scores, this is what we got:

    all-mpnet-base-v2: 70/100
    all-MiniLM-L6-v2:  65/100
    BoW:               35/100
    TF-IDF:            65/100

Conclusion

I found that making product recommendations solely by comparing product description texts is quite difficult. To make more accurate recommendations, you would need more information about the product, like product attributes, product categories, product features, target group and so forth.

Also, if the customer has made previous purchases, this is also data that can be taken into consideration to create item-based collaborative filtering. Another dimension could also be added by comparing what other customers with similar purchase patterns bought to make cross-user recommendations with user-based collaborative filtering.

Going back to the results, I was surprised at how well TF-IDF recommended products, even though it isn’t based on a pretrained language model like the SBERT models are.

If you have a rough idea of what the documents you’re comparing contain and which keywords you want to highlight, TF-IDF can be made even more accurate by adding extra weight to those keywords. I’ve added logic to the TF-IDF vector generator function that I wrote in PHP to let it give more weight to certain keywords.

    // add 4 times weight to these keywords
    $weightedWords = [
        'woman' => 4,
        'women' => 4,
        'man' => 4,
        'men' => 4,
        'toddler' => 4,
        'infant' => 4,
    ];

    $processor->calculateIdf($documents, $weightedWords);

When calculating the similarity score, you could experiment with adding another vector of text into the mix, like the product title. Here’s an example of how you could calculate the similarity score where 30% of the weight is on the product description text and 70% of the weight is on the product title.

    ($compare->cosineSimilarity($descriptionVector1, $descriptionVector2) * 0.3)
        + ($compare->cosineSimilarity($titleVector1, $titleVector2) * 0.7)

That’s it for this time. I hope this will be useful for someone out there looking to get more insight into using NLP to compare and calculate similarity between documents to create a recommender system.

As always, I’ve uploaded all the code that I use in these examples on GitHub, if you’re interested, check out the repository [here].

Until next time, have a good one!